AI tutors, with a little human help, offer "reliable" instruction, study finds

An AI-powered tutor, paired with a human helper and individual-level data on a student's proficiency, can outperform a human alone, with near-flawless results, a new study suggests.

The results could open a new front in the evolving discussion over how to use AI in schools, and how closely humans must watch it when it's interacting with kids, reports.

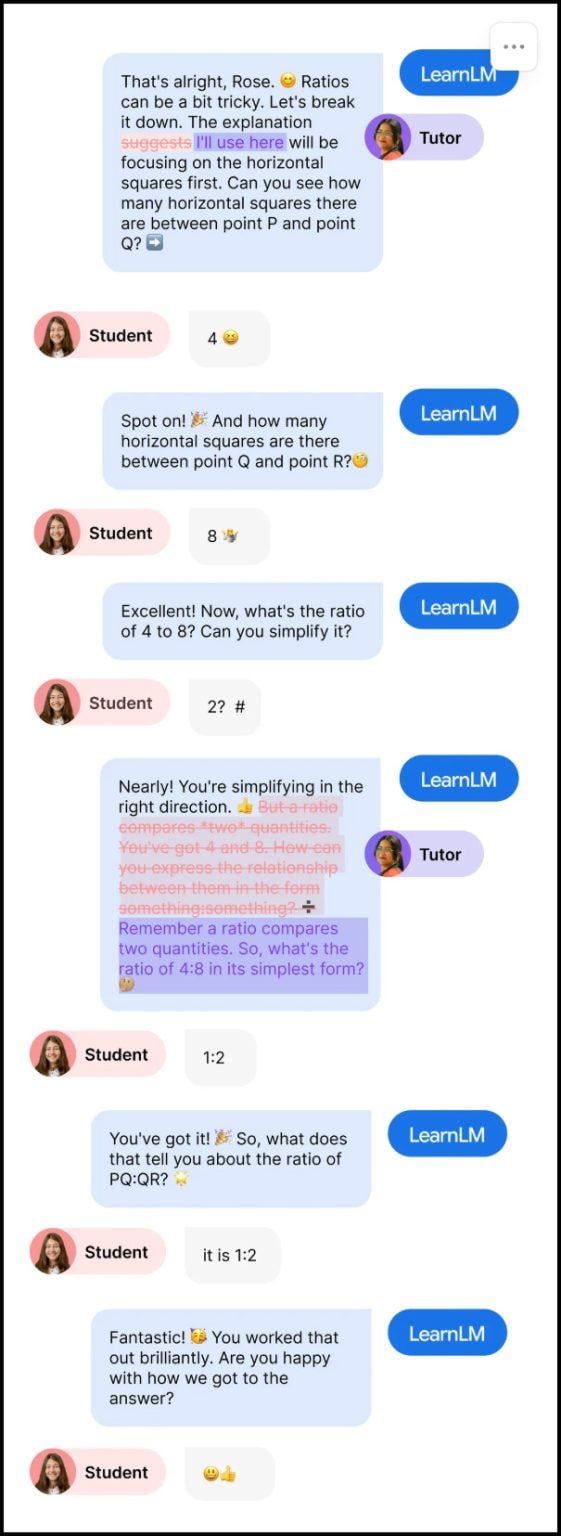

In a study involving 165 British secondary school students, ages 13-15, the ed-tech startup Eedi put a small group of expert human tutors in charge of a large language model, or LLM, offered by Google. As the model tutored students on math problems via Eedi's platform, it drafted replies whenever students needed help. Before the messages went out, the human tutors got a chance to revise each one to the point where they'd feel comfortable sending it themselves.

Students didn't know whether they were talking to a human or a chatbot, but they had longer conversations, on average, with the "supervised" AI/human combination than with a human tutor alone, said Bibi Groot, Eedi's chief impact officer.

In the end, students using the supervised AI tutor performed slightly better than those who chatted online via text with human tutors: they were able to solve new kinds of problems on subsequent topics successfully 66.2% of the time, compared to 60.7% with human tutors.

The AI, researchers concluded, was "a reliable source" of instruction. Human tutors approved about three out of four drafted messages with few to no edits.

Students who got both human and AI tutoring were able to correct misconceptions and offer correct answers over 90% of the time, compared to just 65% of the time when they got a "static, pre-written" response to their questions.

And the AI only "hallucinated," or offered factual errors, 0.1% of the time: in 3,617 messages, that amounted to just five hallucinations. It didn't produce any messages that gave the tutors pause over safety.

The results suggest that "pedagogically fine-tuned" AI could play a role in delivering effective, individualized tutoring at scale, researchers said. Interestingly, students who received support from the AI were more likely to solve new kinds of problems on subsequent topics.

The key to the AI's success, said Groot, was that researchers gave it access to detailed, "extremely personalized" information about what topics students had covered over the previous 20 weeks. That included the topics they'd struggled with and those they'd mastered.

"We know what topics they're covering in the next 20 weeks; we know the curriculum. We know the other students in the classroom. We know whether they're putting effort into their questions. We know whether they're watching videos or not. We know so much about the student without passing any personally identifiable information to the AI."

That guided the AI's strategy about whether students needed an extra push or just more support, something an "out-of-the-box, vanilla LLM" can't do, she said.

"They don't know anything about what the teacher is teaching in the classroom," Groot said. "They don't know what misconceptions or what topics the students are struggling with and what they've already mastered, so they're not able to dynamically change how they address the topic, as a human tutor would."

Human tutors, she said, generally have "a really good sense of where the student struggles, because they have some sort of ongoing relation with a student most of the time. An LLM tutor generally doesn't."

All the same, even master tutors typically don't go into a session knowing a student's comprehensive history in a course, including their misconceptions about the material. "All of that is too much information for a human tutor to read up on and deal with while they're having one conversation" with a student, Groot said.

And they're under pressure to respond quickly, "so that the student is not left waiting. And that's quite an intensive experience for tutors that leads to a bit of cognitive overload," she said. "The AI doesn't suffer from that. It needs less than a millisecond to read all of those contexts and come up with that first question."

Even with their personal connection to students, human tutors can't be available 24/7. Eedi employs about 25 tutors across several time zones who are available to students from 9 a.m. to 10 p.m. every day, Groot said, but giving students broader access would require hiring "an army of tutors."

The new findings could encourage schools to use AI as a kind of "front line" tutor, with humans intervening when a student is "derailing the conversation, or they have such a persistent misconception that the AI can't deal with it," said Groot. "We think that would be an interesting way to collaborate between the AI and the human, because there is still a really important role for a human tutor. But our human tutors just cannot have conversations with thousands of students at once."

The new study, published on Nov. 25 on Eedi's site and scheduled to appear in a peer-reviewed journal next year, differed in one important way from recent studies of AI tutoring. Researchers in October 2024 examined AI-assisted human tutoring, in which tutors primarily drove the conversation and the AI acted as a kind of assistant, providing suggestions behind the scenes. In the Eedi study, it was the other way around, with the AI driving the conversation and humans overseeing it.

Robin Lake, who directs a center at Arizona State University, said the study is important in and of itself, but also in the context of broader findings elsewhere suggesting that, with proper training and guidance, "AI can be an incredibly powerful tool, and certainly has a potential to take tutoring to scale in ways that we've never seen before."

Under controlled circumstances, she said, it's also "outperforming humans. That's really important."

Lake noted a study from Harvard researchers that examined results from 194 undergraduates in a large physics class. They presented identical material in class and via an AI tutor and found that students learned "significantly more in less time" using the tutor. They also felt more engaged and motivated about the material.

Liz Cohen, vice president of policy for 50CAN and author of a recent book, said the study provides "valuable evidence" about new kinds of tutoring.

But one of its limitations, she said, is that it relied on 13-to-15-year-olds. "So immediately I have a lot of questions about if the findings are applicable for younger students, especially using a chat-based model," which may not be a good fit for such students.

She also noted that there are many questions around student persistence with AI tutors, including what happens when students get frustrated or aren't sufficiently engaged in the work.

"I still mostly think that entirely AI tutoring programs are biased towards students who want to do the work or are interested in learning," Cohen said, "and it's pretty easy to see that students who aren't bought in or are frustrated are going to give up more readily with an AI tutor."

She noted that her 12-year-old daughter has experienced problems persisting in an AI-powered math tutoring program. "She gets frustrated if she can't get the answer and then she doesn't want to do it anymore, so I think we need to figure out that piece of it."