How AI can help (or hurt) your mental health: 5 prompts experts recommend

For decades, therapy has been synonymous with the classic image of a client lying on a couch while a therapist scribbles notes. Fast forward to today, and this image is rapidly changing. Imagine instead opening an app and confiding in an AI chatbot, a scenario that's becoming increasingly common as artificial intelligence reshapes how we approach mental wellness.

These smart technologies touch more aspects of our lives daily, with people using AI for everything from generating fun, action-figure avatars to boosting productivity at work. But the biggest change is how AI is starting to connect with our inner lives.

According to , AI-powered therapy and companionship will dominate generative AI use cases in 2025. This raises a crucial question: Are we witnessing a mental health revolution or heading toward uncharted risks?

Like any transformative tool, AI presents a double-edged sword for psychological well-being. While critics rightly sound alarms about the potential dangers, the reality is that this technology isn't going anywhere. So rather than resisting, a better approach might be to ask: How can we use AI to support mental health in ways that are safe, ethical, and actually helpful?

To explore this question, consulted three human experts (yes, actual people) working at the intersection of behavioral health and AI innovation. Here's what they want you to know.

The potential benefits of AI for mental health

Let's state the obvious: AI doesn't have a therapy license or clinical experience. So can it actually help? "Yes, if used responsibly," says , PhD, associate professor of biomedical data science and psychiatry at Dartmouth's Geisel School of Medicine, specializing in AI and mental health.

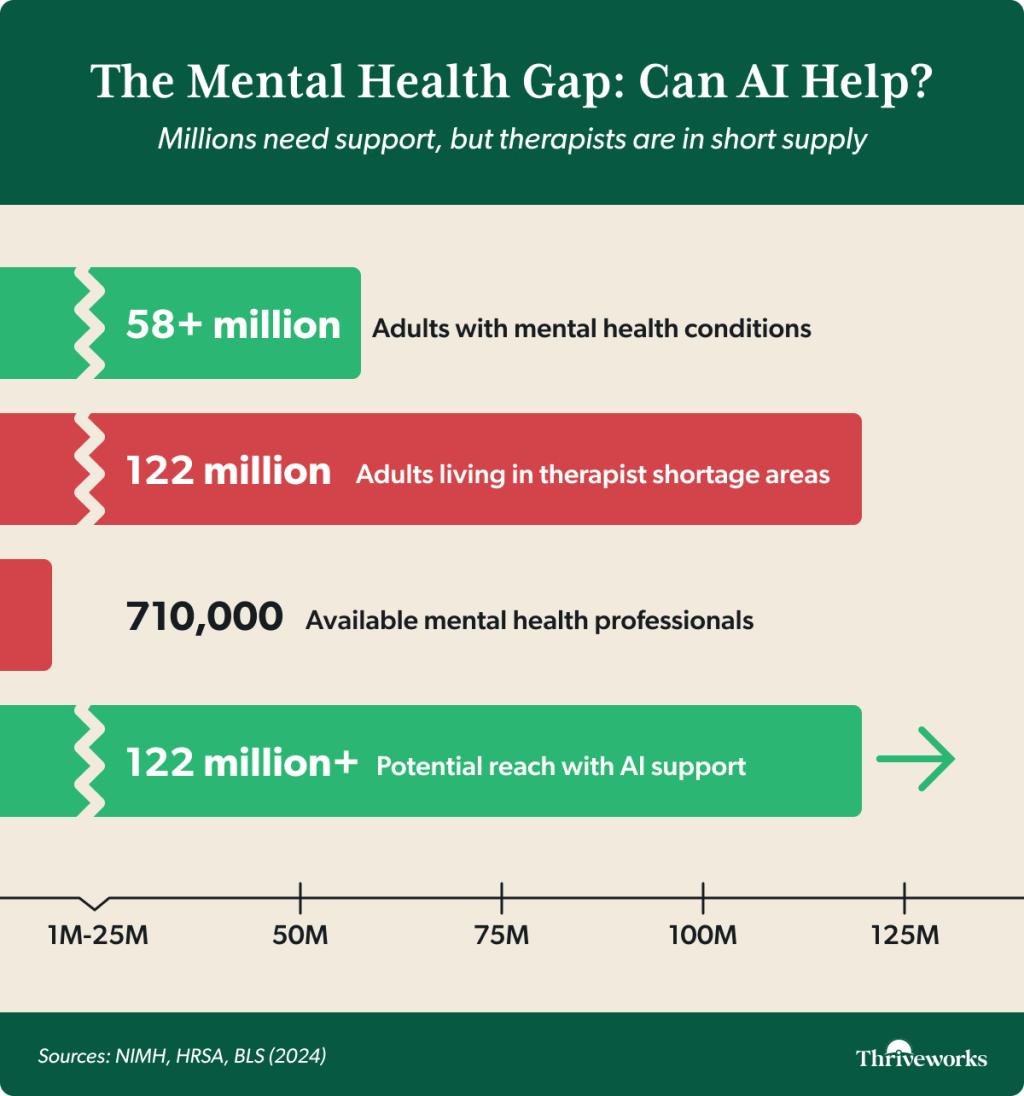

The numbers tell an urgent story: Nearly U.S. adults (58+ million people) have a mental health condition, while live in areas with therapist shortages. There simply aren't enough mental health professionals to help everyone who is struggling. Online therapy helps bridge the gap, but .

"The need for innovative solutions is urgent," researchers noted in a on AI and mental health. AI's key advantage? Immediate access.

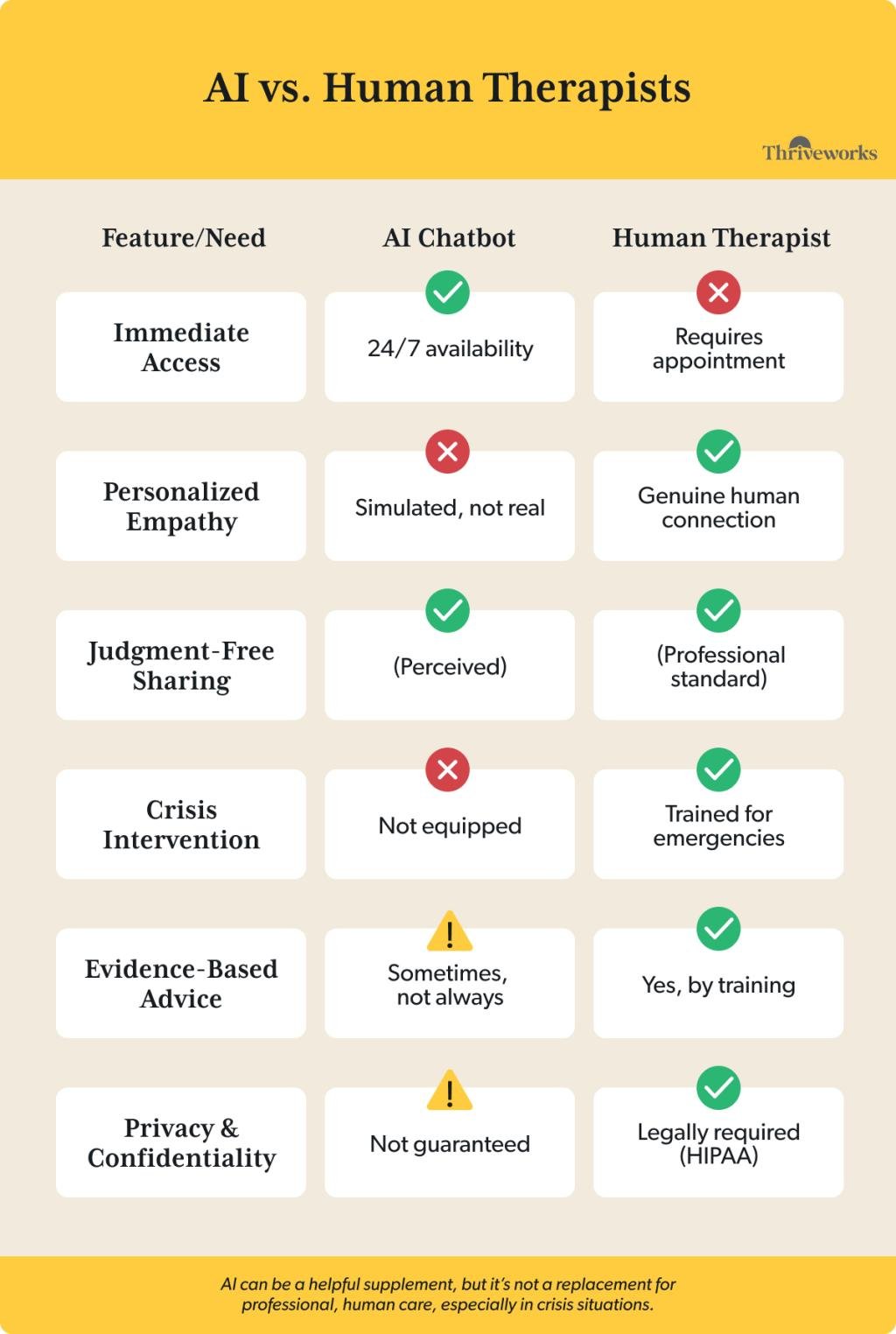

"If you don't have access to a therapist, AI is better than nothing," says , PhD, associate director for the International Institute of Internet Interventions for Health at Palo Alto University. "It can help you unlock the roadblocks you have at the very right moment: when you're struggling." This helps even those who are in "real" therapy, since they otherwise have to wait for their scheduled appointment to talk with a therapist.

Another benefit: vulnerability without fear. "People often share more with AI," Bunge says. While therapists won't judge, many people still hesitate to disclose sensitive issues to a human being.

More research is needed on the safety and effectiveness of large language models (LLMs) like ChatGPT in addressing mental health struggles, especially in the long term, but early research shows promise. In , 80% of participants found ChatGPT helpful for managing their symptoms. But based on Jacobson's own research on the topic, he cautions: "People generally liked using ChatGPT as a therapist and reported benefits, but there was also a significant amount of people who didn't, complaining about its 'guardrails.'" This refers to the LLM shutting down conversations about issues that require higher levels of care, like suicide. (Read on to learn why this might be a good thing.)

The must-know risks of AI-generated support

If you're using generative AI for mental health support, especially if you aren't also working with a human therapist, proceed with caution. "There's great benefit, but there's also great risk," Jacobson says.

Key concerns to remember:

- Untrustworthy sources: AI learns from the information available on the internet. And as we all know by now, you can't trust everything you read online.

- Convincing but risky: "AI almost always sounds incredibly fluent, even when it's giving you harmful responses," Jacobson says. "It can sound convincing, but that doesn't necessarily mean it's actually quality or evidence-based care, and sometimes it's harmful."

- No human safeguards: No matter how much it might feel like you're talking to a real person, "AI isn't a human who is looking out for you," says , PhD, associate director of UMass Boston's Center for Evidence-Based Mentoring. "Responses can be incorrect, culturally biased, or harmful."

- Privacy risks: "These aren't HIPAA-compliant machines," Jacobson says. We don't really know how AI might collect, store, or use the information you share. Werntz agrees, adding: "I wouldn't share anything that I wasn't comfortable with others reading. We can't assume these tools follow the same confidentiality rules as therapists or medical providers."

The bottom line: AI has potential, if we focus on its strengths while acknowledging its limits. Next, we'll explore exactly what that looks like.

5 ways AI can support mental well-being, including expert-backed prompts

"AI can absolutely help with mental health when used the right way," Werntz says, "but it can't replace therapy." It works best as a supplement or bridge to in-person care.

Here are five safe and effective ways to use it:

1. Let AI help you name what you're feeling (cautiously).

AI can't diagnose you, but it can help you spot patterns and give you a sense of what might be going on, Bunge says. Therapists spend 45+ minutes assessing patients and ruling out possibilities to come to a diagnosis, he explains. AI can't do this as accurately, but it can offer a starting point for those who can't access therapy yet.

How to try it: List your symptoms in detail (e.g., "I've been crying daily, struggling to sleep, and feel hopeless") and ask: "What mental health conditions might align with these experiences?" Always add: "Remember this isn't a diagnosis. What should I consider?" This encourages a cautious response.

Critical reminder: Treat AI's responses like a rough draft, not a final answer.

2. Match your symptoms to proven treatments.

Whether you already know your diagnosis or AI just came up with a hypothesis for you, you can move on to the next step: exploring clinically validated treatment options, Bunge says.

Ask: "What are evidence-based treatments for [specific symptom or problem]?"

Follow up with: "What are the first steps I could take if I choose [treatment name]?"

For example, this might translate to: "What are gold-standard treatments for panic attacks? How could I safely start CBT techniques at home?"

3. Get clarity on different types of therapy.

If you're new to psychotherapy, you might not know the many treatment options available or what therapy actually involves. "People often don't know what therapy looks like or have misconceptions from media portrayals," Werntz says. "AI offers a safe way to explore what to expect."

Try prompts like:

- "I'm considering therapy for PTSD. What are effective talk therapies, and what are the pros and cons of each?"

- "I'm nervous about starting therapy. Can you explain what typically happens in a first session?"

- "Explain EMDR in simple terms, like I'm a 7th grader." You can use this one to learn more about any specific treatment type.

4. Reframe negative thoughts with AI's help.

If you're stuck in a negative thought loop or dealing with a tough situation, AI can help you see things differently, Werntz says. For example, if a friend didn't text back and you start thinking, "They must hate me," AI can guide you to rethink that.

Try prompts like:

- "I'm feeling down because my friends didn't text me back after I asked them to dinner. Can you help me reframe my thoughts? I've heard about cognitive restructuring but don't know how it works."

- "I'm stressed about work and parenting and keep thinking I'm failing. Can you help me challenge these thoughts?"

You can use this approach for any situation: just tell AI what you're struggling with and ask it to help you break the cycle of negative thinking.

5. Combat loneliness and spark real-life connections.

While AI can't replace human relationships, it can help bridge the gap for those feeling lonely. "It's not particularly helpful or healthy when people substitute AI for real connection," Jacobson says, but it's a great tool to help start conversations in real life.

"AI can be creative in ways that I could never be," Werntz says, "but you always have to balance its suggestions with human judgment." Think of it as a practice buddy, not a replacement for real socializing.

How to try it:

- "I'm new to this city. What are some ways I can meet people?"

- "What are neutral, low-pressure conversation starters?" or "What's a fun, non-awkward way to start a chat with coworkers?"

- "I want to make more friends. Help me practice small talk so I feel a little more confident."

When it's not safe to use AI for mental health

AI isn't a trained human caregiver. In these critical situations, relying on it isn't just unhelpful, it's dangerous:

- You're having suicidal thoughts or self-harm urges. Call 911 or 988 immediately. "There will always be a real person to help in a crisis," Werntz says. And that's what you need in this situation.

- You're at risk of harming others. This is another time to contact a human professional by calling 911 or 988 right away, Bunge says.

- You're experiencing psychosis symptoms. Those who have any disorder with (e.g., schizophrenia or bipolar disorder) should not rely on AI for support, Bunge says. Seek in-person treatment ASAP to reduce risks of violence or suicide.

- You have an eating disorder. While there's potential for AI to help people with eating disorders, there are also specific dangers Jacobson has found through . "AI often encourages weight loss, even if you're underweight or eating very few calories," he says. This can be dangerous both physically and mentally.

In short, AI lacks the judgment to handle emergencies or complex disorders safely. In the situations above, always opt for real human support.

Looking ahead: What AI means for the future of therapy

We have a long way to go, but the future looks bright. "I'm quite optimistic," Jacobson says. "I think it's actually a huge game changer for the availability of high-quality mental health care."

Here's what to watch for in the coming months:

- Therapy bots: These are AI models specifically programmed for mental health treatment by real humans, unlike general AI models trained on random internet data. In March 2025, Dartmouth researchers, including Jacobson, published the showing that a generative AI therapy chatbot called Therabot significantly reduced symptoms in people with major depressive disorder, generalized anxiety disorder, and those at high risk for eating disorders.

- AI co-therapists: Another promising development, noted by Bunge, is AI working alongside both clients and therapists. Clients can use an AI bot (trained by humans for this purpose) whenever they need it, while therapists review the AI chat logs to better understand what clients are experiencing and tailor their support in future sessions.

- More tailored AI options: It's likely that soon we'll see more AI tools specifically designed for mental health care and trained by humans, rather than relying on general LLMs for support.

Despite these advances, real-life therapists remain essential. "Human care will always be huge and necessary for a pretty large segment of the population," Jacobson says. AI, even when trained by humans, can't replace , Werntz adds. "AI can never replace another person truly caring for us."
